This tutorial teaches how to create a lexicon using a weighted finite state transducer (WFST), and uses Kyfd to perform word segmentation using that lexicon.

This tutorial is intended to be run on linux, and you must have Kyfd and OpenFst installed. Check to make sure they exist by running the following commands:

$ kyfd --version $ fstcompose --help

If both give you output, then you have the necessary software installed. It would probably be a good idea to familiarize yourself with WFSTs and OpenFst if you haven't already. Next, download the data for this tutorial:

Decompress it and check the contents, and move into the directory:

$ tar -xzf tut1.tar.gz

$ tree tut1

tut1

|-- config

| `-- tut1.xml

|-- data

| |-- test1.txt

| `-- train.txt

`-- script

|-- makelexicon.pl

|-- makesymbol.pl

|-- replacew.pl

|-- separatechars.pl

`-- wordvocab.pl

$ cd tut1

Now, we will make a finite state transducer to represent a lexicon. A lexicon takes character tokens as input, and outputs word tokens. We will use the data in the file train.txt to make the lexicon, so let's first take a peek at its contents:

$ head -3 data/train.txt english is a west germanic language that developed in england during the anglo-saxon era . as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 18th , 19th , and early 20th centuries and of the united states since the mid 20th century , it has become the lingua franca in many parts of the world . it is used extensively as a second language and as an official language in commonwealth countries and many international organizations .

As you might have guessed from the content, this file contains part of the Wikipedia article on the English Language, processed to lower-case with punctuation split from words. The first thing we'll do to process this article is to make a list of all the unique words in this file. There is a program in the script directory that will do this for us, so let's run it and check the results:

$ script/wordvocab.pl < data/train.txt > word.vocab $ head word.vocab " ' ( ) *karo *surgo , -dom -heit -hood

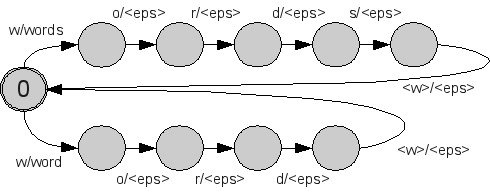

We can see that we now have a list of all the words in train.txt sorted in alphabetical order. Using this file we'll make a lexicon that looks something like the following:

As you can see, the lexicon takes on character at a time as input, and outputs a whole word at once. The top part accepts the characters "w o r d s <w>" and outputs "words", and the bottom part accepts the characters "w o r d <w>" and outputs "word." If you're not so familiar with WFSTs, you probably were confused by the two bizarre looking characters "<eps>" and "<w>:"

A program makelexicon.pl is included in the script directory, so let's try to run it

script/makelexicon.pl < word.vocab > lexicon.txt

and take a look at the results for the words "word" and "words":

... 0 7726 w word 1 7726 7727 o <eps> 7727 7728 r <eps> 7728 7729 d <eps> 7729 0 <w> <eps> 0 7730 w words 1 7730 7731 o <eps> 7731 7732 r <eps> 7732 7733 d <eps> 7733 7734 s <eps> 7734 0 <w> <eps> ...

As we can see, we now have a file that represents the lexicon described in the diagram above. You may also notice that we have added a weight of 1 to the first transition of every lexicon entry. This is a way to count the number of words that the lexicon has produced, and will come in handy later when we're decoding.

One of the great things about WFSTs is that it is possible to determinize and minimize them using standard algorithms, allowing for efficient search. In this section we will use OpenFst's tools to create an optimized FST from our current, unnecessarily large FST file.

First we need to compile the text file into binary FST format. To do so, we first need to make files that map symbol strings into the integers that will represent them in the binary file. The script makesymbol.pl will do this for us, so let's run it and check the results.

$ script/makesymbol.pl -input lexicon.txt > char.sym $ head char.sym <eps> 0 <s> 1 <unk> 2 <w> 3 " 4 % 5 ' 6 ( 7 ) 8 * 9 $ script/makesymbol.pl -output lexicon.txt > word.sym $ head word.sym <eps> 0 <s> 1 <unk> 2 <w> 3 " 4 ' 5 ( 6 ) 7 *karo 8 *surgo 9

We can see that char.sym now contains a mapping from characters to integers, and word.sym contains a mapping from words to integers. The first four symbols are special symbols representing the empty string, sentence terminal, unknown words, and word terminals respectively. With these symbol files, we will now use OpenFst to compile our model.

$ fstcompile --isymbols=char.sym --osymbols=word.sym lexicon.txt lexicon.cmp

Now that we have our compiled binary file, we can use OpenFst to determinize, minimize, and sort our model:

$ fstdeterminize lexicon.cmp lexicon.det $ fstminimize lexicon.det lexicon.min $ fstarcsort lexicon.min lexicon.srt

It may not be immediately obvious why these operations are necessary, so let's take a look at the file sizes of the FSTs before and after determinization and minimization:

$ ls -l lexicon.cmp lexicon.srt -rw-r--r-- 1 neubig neubig 238170 2009-09-05 20:50 lexicon.cmp -rw-r--r-- 1 neubig neubig 72998 2009-09-05 20:53 lexicon.srt

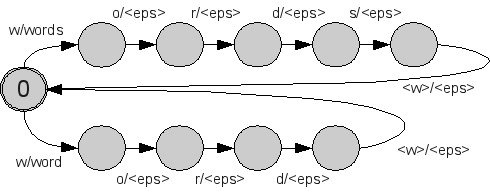

We can see that after minimization, the lexicon is less than 1/3 of its previous size! Because small FSTs save memory, and are generally faster to decode, this is definitely a good thing. Further, let's print the minimized FST to readable form and take a look at the transitions leading to the words "word" and "words":

$ fstprint --isymbols=char.sym --osymbols=word.sym lexicon.srt > lexicon.srt.txt 0 34 w <eps> 1 34 239 o <eps> 239 684 r <eps> 684 1104 d <eps> 1104 0 <w> word 1104 1 s words 1 0 <w> <eps>

Expressed graphically, these states look like this:

Those of you who are familiar with data structures or natural language processing might recognize this as a Trie. While the previous graph had one arc for each character in each word, identical characters at the beginning of words now share their arcs. This greatly reduces size and complexity of the tranducer.

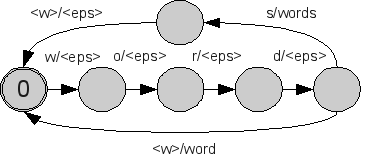

We now have an FST in the form we desire, but there is one more thing we have to do before we decode. With <w> symbols remaining in the FST, we would have to manually specify the word boundaries by inserting the <w> symbol after every word, which would defeat the purpose of having a lexicon in the first place. In order to fix this problem, we replace <w> symbols with <eps> symbols, which can be followed regardless of input.

To do this, we use the tool fstrelabel. This takes a mapping of old symbol numbers to new symbol numbers. If we remember the lines from the symbol file:

<eps> 0 <w> 3

We can see that <w> is mapped to 3, and <eps> is mapped to 0, so we create a single-line file named map.txt with 3 in the first column, and 0 in the second column.

echo "3 0" > map.txt

Using this file, we can now change <w> labels into <eps>.

fstrelabel --relabel_ipairs=map.txt lexicon.srt lexicon.fst

Finally, we can check that it actually worked by printing out the new FST and making sure that there are no more <w> symbols in the input.

$ fstprint --isymbols=char.sym --osymbols=word.sym lexicon.fst > lexicon.fst.txt 0 34 w <eps> 1 34 239 o <eps> 239 684 r <eps> 684 1104 d <eps> 1104 0 <eps> word 1104 1 s words 1 0 <eps> <eps>

Now we have an FST that is ready for decoding!

To run Kyfd, we need a configuration file that specifies the model that we would like to use and the various settings that we wish to specify. The configuration file must be in XML format, so first, we'll make the skeleton of the XML file, including opening and closing "kyfd" tags.

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<kyfd>

<!-- Configuration goes here -->

</kyfd>

Now, inside the "kyfd" tags, we'll put the configuration we want to specify. At first, the only options we need to specify are the location of the input and output symbol files.

<arg name="isymbols" value="char.sym" /> <arg name="osymbols" value="word.sym" />

In addition to the configuration options, we also specify the location of the FSt model (or models) that we want to use to decode. For now we'll use the single lexicon model by adding the following line:

<fst file="lexicon.fst" />

You can save this file and use it if you'd like, or you can use the config/tut1.xml file that should include this exact configuration.

In this section, we'll actually use lexicon model to create word sequences from character sequences. To do this, first we need to create a character sequence to use as our input. There's a script called separatechars.pl that will create the input for us, so let's run it and check the results.

$ script/separatechars.pl < data/train.txt > input.txt $ head -n 3 input.txt e n g l i s h i s a w e s t g e r m a n i c l a n g u a g e t h a t d e v e l o p e d i n e n g l a n d d u r i n g t h e a n g l o - s a x o n e r a . a s a r e s u l t o f t h e m i l i t a r y , e c o n o m i c , s c i e n t i f i c , p o l i t i c a l , a n d c u l t u r a l i n f l u e n c e o f t h e b r i t i s h e m p i r e d u r i n g t h e 1 8 t h , 1 9 t h , a n d e a r l y 2 0 t h c e n t u r i e s a n d o f t h e u n i t e d s t a t e s s i n c e t h e m i d 2 0 t h c e n t u r y , i t h a s b e c o m e t h e l i n g u a f r a n c a i n m a n y p a r t s o f t h e w o r l d . i t i s u s e d e x t e n s i v e l y a s a s e c o n d l a n g u a g e a n d a s a n o f f i c i a l l a n g u a g e i n c o m m o n w e a l t h c o u n t r i e s a n d m a n y i n t e r n a t i o n a l o r g a n i z a t i o n s .

We can see that the data has been transformed into a sequence of characters (each separated by a single space).

And now we're finally ready to actually use the decoder! Because we did all this preparation, using the decoder itself is actually quite easy. Simply run the following command and observe the output.

$ kyfd config/tut1.xml < input.txt > output.txt -------------------------- -- Started Kyfd Decoder -- -------------------------- Loaded configuration, initializing decoder... Loading fst lexicon.fst... Done initializing, took 0 seconds Decoding... ...................................................................................................100........................ Done decoding, took 1 seconds

If you got a message like this, the decoding worked properly. Otherwise, take a look at the error message and try to fix the problem. Common culprits are be mis-named files, or forgetting one of the previous steps. Now, let's take a look at the output.txt file.

$ head -n 3 output.txt english is a west germanic language that developed in england during the anglo-saxon era . as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 18th , 19th , and early 20th centuries and of the united states since the mid 20th century , it has become the lingua franca in many parts of the world . it is used extensively as a second language and as an official language in commonwealth countries and many international organizations .

We can see that the lexicon has perfectly recovered the original words from the characters! Of course, this is an easy job because this is a closed test (we're using the same data for training and testing), but for now we know it works.

Now, let's try adding some other options. First, let's try displaying the scores assigned to the answers by adding the -output score parameter.

$ kyfd -output score config/tut1.xml < input.txt > output2.txt $ head -n 3 output2.txt english is a west germanic language that developed in england during the anglo-saxon era . ||| 15 as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 18th , 19th , and early 20th centuries and of the united states since the mid 20th century , it has become the lingua franca in many parts of the world . ||| 54 it is used extensively as a second language and as an official language in commonwealth countries and many international organizations . ||| 21

Now, in addition to the output, we have a triple pipe "|||" and a number. You may remember that all the way back in Section 2, we added a weight of 1 for each word that was created by the lexicon. Because of this, the score for each sentence is the sum of these weights, or in other words, the number of words in the sentence.

Because the decoder searches for the answer with the smallest weight, weighting the sentences in this way will cause the decoder to find the sentence with the smallest possible number of words given the character sequence input. But there are actually a number of other possible answers as well. We can print some of these answers by setting the -nbest parameter, which specifies the maximum number of answers to output for each input. Let's try using it (along with the score parameter).

$ kyfd -nbest 10 -output score config/tut1.xml < input.txt > output3.txt $ head -n 5 output3.txt 0||| english is a west germanic language that developed in england during the anglo-saxon era . ||| 15 1||| as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 18th , 19th , and early 20th centuries and of the united states since the mid 20th century , it has become the lingua franca in many parts of the world . ||| 54 1||| as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 1 8th , 19th , and early 20th centuries and of the united states since the mid 20th century , it has become the lingua franca in many parts of the world . ||| 55 1||| as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 18th , 1 9th , and early 20th centuries and of the united states since the mid 20th century , it has become the lingua franca in many parts of the world . ||| 55 1||| as a result of the military , economic , scientific , political , and cultural influence of the british empire during the 18th , 19th , and early 20th centuries and of the united states since the mid 20th century , it has be come the lingua franca in many parts of the world . ||| 55

Notice that now all sentences have an additional number and triple pipe at the beginning of the sentence. This number corresponds to the line number (starting at 0) in the input file. Because our current model only can parse the first sentence (indicated by number 0) in a single way, only a single sentence is output, but the second sentence (indicated by number 1) has several outputs. You may notice that there are small differences between the outputs for the second sentence.

Well, that's the end of the first tutorial. By now you should have a general idea about how to create a model, configuration file, and data for use with Kyfd. The next tutorial will deal with combining separate models and outputting individual components separately.